The Model Context Protocol (MCP) is an open standard that connects AI models to your tools, databases, and services in a secure, controlled way. Think of it as a translator that helps AI understand and work with your systems.

AI models are powerful but often lack access to real-world data. MCP addresses this by providing a standard, secure bridge between AI applications and your existing systems, without requiring you to give up control over your data.

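It follows a simple client-server model. An AI application (the host) runs MCP clients, and each client connects to an MCP server that exposes your tools and data. This keeps you in charge of what the AI can reach while still making it more useful.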
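Under the hood, MCP messages are JSON-RPC 2.0. As a rough illustration, the sketch below builds the `tools/call` request a client sends to a server and the kind of result envelope a server sends back. The tool name `search_issues` and its `jql` argument are hypothetical examples, not tools defined by MCP or by Atlassian.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP tools/call request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


def make_tool_result(request_id: int, text: str, is_error: bool = False) -> str:
    """Serialize a tools/call result: a list of content items plus an error flag."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,  # must match the id of the request being answered
        "result": {
            "content": [{"type": "text", "text": text}],
            "isError": is_error,
        },
    })


if __name__ == "__main__":
    # Hypothetical tool name and arguments, for illustration only.
    print(make_tool_call(1, "search_issues", {"jql": "assignee = currentUser()"}))
    print(make_tool_result(1, "PROJ-123: Login fails for SSO users"))
```

The client never calls Jira directly; it only exchanges messages like these with the server, which decides what actually happens.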

In the MCP architecture, the MCP server is the central component that connects your AI assistant to the tools and services it needs to be useful, securely and under your control. Think of it as middleware that not only shares information but can also perform actions on your behalf.

Unlike a traditional API that simply sends data back and forth, an MCP server describes its capabilities (tools, resources, and prompts) in a form an AI model can discover and use. It acts as:

A secure translator – interpreting requests from the AI and mapping them to the right API calls.

A gatekeeper – enforcing access rules so sensitive data and actions are controlled.

A context engine – enriching responses with relevant metadata or summaries tailored to the AI model.

An action executor – performing real-world tasks like creating tickets or updating documents.
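To make the translator and action-executor roles concrete, here is a minimal sketch that turns a hypothetical `create_issue` tool call into a Jira Server/Data Center REST request (`POST /rest/api/2/issue`). It assumes a personal access token sent as a Bearer header (available on Jira Data Center 8.14 and later) and uses a placeholder base URL; `build_create_issue_request` is a hypothetical helper. It only builds the request and does not send it.

```python
import json
import urllib.request

JIRA_BASE_URL = "https://jira.example.com"  # placeholder: your Data Center base URL


def build_create_issue_request(args: dict, token: str) -> urllib.request.Request:
    """Translate hypothetical create_issue tool arguments into a Jira REST v2 request."""
    fields = {
        "project": {"key": args["project"]},
        "summary": args["summary"],
        "issuetype": {"name": args.get("issue_type", "Task")},
    }
    if "priority" in args:
        # Priority names must exist in the instance's priority scheme.
        fields["priority"] = {"name": args["priority"]}
    body = json.dumps({"fields": fields}).encode("utf-8")
    return urllib.request.Request(
        url=f"{JIRA_BASE_URL}/rest/api/2/issue",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",  # personal access token
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    req = build_create_issue_request(
        {"project": "AUTH", "summary": "Login fails for SSO users",
         "issue_type": "Bug", "priority": "High"},
        token="<personal-access-token>",
    )
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # To execute it (the action-executor step): urllib.request.urlopen(req)
```

Keeping request construction separate from execution makes it easy to log, review, or block a call before anything changes in Jira.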

While MCP is gaining traction as a standard for connecting AI models to external systems, many teams using Atlassian products like Jira and Confluence may be wondering how it applies to their environment, especially if they run Data Center or other self-hosted instances.

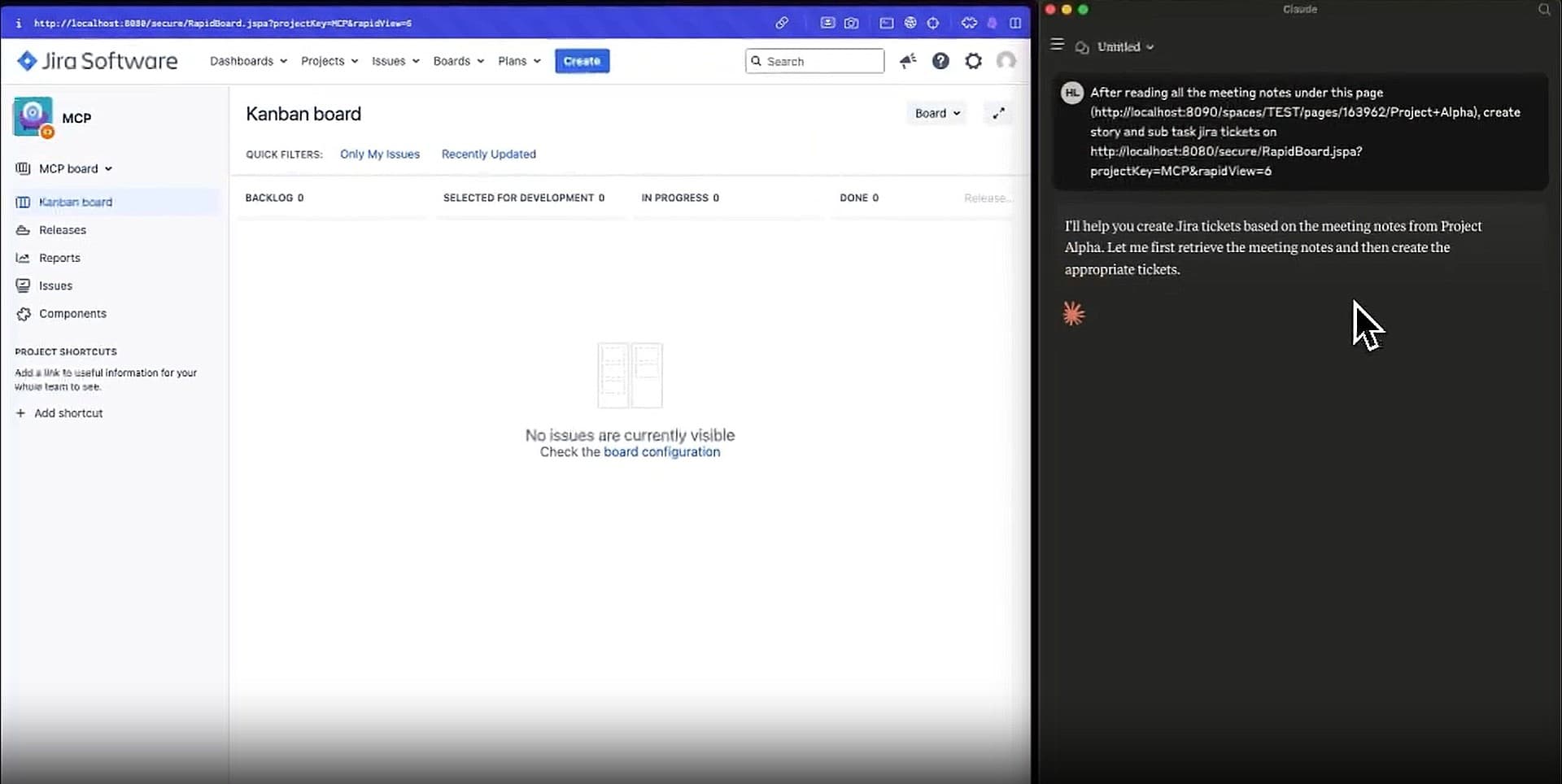

Currently, Atlassian Cloud is beginning to support integrations with AI platforms through hosted MCP servers (for example, Anthropic's Claude integrations with Jira Cloud and Confluence Cloud). These integrations let AI models perform tasks such as summarizing Jira work items or Confluence pages, creating issues or pages directly from the AI interface, taking bulk actions (like generating multiple issues at once), and enriching Jira items with relevant context pulled from other sources the model can access. However, these capabilities are currently limited to Atlassian Cloud and are not yet available for self-managed (on-premises) Atlassian products.

Atlassian's Remote MCP Server is available to Atlassian Cloud customers on the Free, Standard, Premium, and Enterprise plans. Rate limits vary by plan (e.g., 500 to 1,000 calls per hour, plus an additional allowance per user).

For teams on self-managed Jira or Confluence (Server or Data Center), the official Remote MCP Server is not available. The open-source community, however, has created capable alternatives. For example, the mcp-atlassian project (by sooperset) is a Docker/Python-based MCP server that supports both Atlassian Cloud and Server/Data Center deployments (Jira 8.14+ and Confluence 6.0+). Another implementation, by phuc-nt, also offers broad support for Server/Data Center environments, including Jira workflows, Confluence pages, attachments, and agile features.

If you're using Jira Software Data Center or Confluence Server, there's no official MCP server that can act as the bridge between your AI model and your internal Atlassian setup. But that doesn’t mean it can’t be done.

MCP's architecture is flexible: it allows you to implement your own MCP server tailored to your environment.

The key benefit of running your own MCP server is control. You define what the AI can access, what actions it can perform, and under what conditions.
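As a sketch of what that control can look like, the policy check below allows read-only tools everywhere, limits write tools to an allowlist of projects, and refuses bulk operations above a size cap. The tool names, project keys, and limit are hypothetical examples, not settings from any existing server; a real server would run a check like this before translating a tool call into a REST request.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    # Hypothetical tool names and project keys; adjust to your own server.
    read_tools: set[str] = field(default_factory=lambda: {"search_issues", "get_issue"})
    write_tools: set[str] = field(default_factory=lambda: {"create_issue", "transition_issue"})
    writable_projects: set[str] = field(default_factory=lambda: {"PROJ", "AUTH"})
    max_bulk_items: int = 20

    def check(self, tool: str, args: dict) -> tuple[bool, str]:
        """Return (allowed, reason) for a proposed tool call."""
        if tool in self.read_tools:
            return True, "read-only tool"
        if tool not in self.write_tools:
            # Anything not explicitly exposed is denied by default.
            return False, f"tool '{tool}' is not exposed"
        project = args.get("project", "")
        if project not in self.writable_projects:
            return False, f"writes to project '{project}' are not allowed"
        if len(args.get("issue_keys", [])) > self.max_bulk_items:
            return False, "bulk operation exceeds the configured limit"
        return True, "write allowed"


if __name__ == "__main__":
    policy = Policy()
    print(policy.check("search_issues", {}))
    print(policy.check("create_issue", {"project": "HR"}))
    print(policy.check("create_issue", {"project": "PROJ"}))
```

Denying by default and returning a reason string lets the server report a clear refusal to the model instead of failing silently.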

As there is no official MCP server support for Atlassian Data Center products, you can build your own. If you have some experience with Atlassian’s REST APIs (for Jira, Confluence, etc.), building an MCP-compatible server is technically feasible. It involves:

Implementing the MCP protocol messages (such as initialize, tools/list, and tools/call) over a supported transport, like stdio or HTTP

Translating AI-generated intents into API requests (e.g., "create issue", "search page")

Handling authentication and access control internally

Ensuring data is filtered or formatted in a way that's useful for language models
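These steps can be sketched with nothing but the Python standard library. The handler below answers `initialize`, `tools/list`, and `tools/call` as newline-delimited JSON-RPC messages (the framing MCP's stdio transport uses), trims a Jira issue down to the fields a model needs, and returns errors for unknown methods, unknown tools, and missing arguments. The `get_issue` tool and the stubbed `fetch_issue` function are hypothetical placeholders; a real server would call the Jira REST API there, and the official MCP SDKs handle protocol details (version negotiation, notifications, cancellation) that this sketch skips.

```python
import io
import json
import sys
from typing import Optional

# Hypothetical tool exposed by this sketch; inputSchema is a JSON Schema object.
TOOLS = [{
    "name": "get_issue",
    "description": "Fetch a Jira issue by key and return a compact summary.",
    "inputSchema": {
        "type": "object",
        "properties": {"key": {"type": "string"}},
        "required": ["key"],
    },
}]


def fetch_issue(key: str) -> dict:
    """Stub standing in for GET /rest/api/2/issue/{key} on Jira Data Center."""
    return {"key": key, "fields": {
        "summary": "Login fails for SSO users",
        "status": {"name": "Open"},
        "assignee": {"displayName": "John Smith"},
        "customfield_10001": None,  # noise the model does not need
    }}


def condense(issue: dict) -> str:
    """Keep only the fields that help a language model reason about the issue."""
    f = issue["fields"]
    assignee = (f.get("assignee") or {}).get("displayName", "Unassigned")
    return f"{issue['key']} [{f['status']['name']}] {f['summary']} (assignee: {assignee})"


def handle(message: dict) -> Optional[dict]:
    """Dispatch one JSON-RPC message; notifications (no id) get no reply."""
    if "id" not in message:
        return None
    msg_id, method = message["id"], message.get("method")
    params = message.get("params", {})
    if method == "initialize":
        result = {
            # Echoed for simplicity; a real server picks a version it supports.
            "protocolVersion": params.get("protocolVersion"),
            "capabilities": {"tools": {}},
            "serverInfo": {"name": "jira-dc-sketch", "version": "0.1"},
        }
    elif method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call":
        if params.get("name") != "get_issue":
            return {"jsonrpc": "2.0", "id": msg_id,
                    "error": {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}}
        key = params.get("arguments", {}).get("key")
        if not key:
            # Tool-level failures are reported inside the result with isError set.
            result = {"content": [{"type": "text", "text": "Missing required argument: key"}],
                      "isError": True}
        else:
            result = {"content": [{"type": "text", "text": condense(fetch_issue(key))}],
                      "isError": False}
    else:
        return {"jsonrpc": "2.0", "id": msg_id,
                "error": {"code": -32601, "message": f"Method not found: {method}"}}
    return {"jsonrpc": "2.0", "id": msg_id, "result": result}


def serve(instream, outstream) -> None:
    """Read one JSON-RPC message per line and write one reply per line."""
    for line in instream:
        if line.strip():
            reply = handle(json.loads(line))
            if reply is not None:
                outstream.write(json.dumps(reply) + "\n")
                outstream.flush()


if __name__ == "__main__":
    # Demo with canned input; as a real stdio server you would call serve(sys.stdin, sys.stdout).
    demo = io.StringIO(
        '{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}\n'
        '{"jsonrpc": "2.0", "id": 2, "method": "tools/call", '
        '"params": {"name": "get_issue", "arguments": {"key": "PROJ-123"}}}\n'
    )
    serve(demo, sys.stdout)
```

Returning a one-line summary instead of the raw issue JSON keeps the model's context small and free of custom-field noise.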

Typical tech stacks include Java with Spring Boot, Node.js, or any framework you're comfortable with for building API services.

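With your Jira MCP server properly configured, you can use AI to perform tasks such as the following. The last three examples also assume the AI client can reach Slack (and, for documentation sync, Confluence), for example through additional MCP servers.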

Creating Issues: "Create a bug ticket for the authentication service with high priority"

Updating Issues: "Change the status of PROJ-123 to 'In Progress' and assign it to John"

Searching Issues: "Find all critical bugs assigned to me that are still open"

Sprint Status: "Give me a summary of the current sprint's progress"

Project Metrics: "Show me the burndown chart for the current sprint"

Workload Analysis: "Who has the most open tickets in the development team?"

Issue Transitions: "Move all completed tickets to the 'Done' status"

Bulk Updates: "Add the 'frontend' label to all issues related to UI components"

Worklog Management: "Log 2 hours of work on ticket PROJ-456 for yesterday"

Slack to Jira: "Create tickets for all action items mentioned in today's planning meeting"

Documentation Sync: "Update the Confluence page with the latest requirements discussed in #project-alpha"

Status Updates: "Post a summary of completed sprint tickets to the #team-updates channel"
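Behind prompts like these, the server still ends up issuing ordinary Jira REST calls. The sketch below shows how two of the examples might be translated: the search prompt into a JQL query for `GET /rest/api/2/search`, and the worklog prompt into a body for `POST /rest/api/2/issue/{key}/worklog`. The helper names are hypothetical, the JQL assumes your priority scheme contains a priority named "Critical", and "yesterday" is interpreted, as an assumption, as 09:00 UTC on the previous day.

```python
import json
from datetime import datetime, timedelta, timezone
from urllib.parse import urlencode


def critical_open_bugs_jql() -> str:
    """JQL for "Find all critical bugs assigned to me that are still open".
    Assumes a priority named 'Critical' exists in the instance."""
    return ("issuetype = Bug AND priority = Critical "
            "AND assignee = currentUser() AND statusCategory != Done")


def search_path(jql: str, max_results: int = 50) -> str:
    """Path and query string for GET /rest/api/2/search with URL-encoded JQL."""
    return "/rest/api/2/search?" + urlencode({"jql": jql, "maxResults": max_results})


def worklog_payload(hours: float, now: datetime) -> str:
    """Body for POST /rest/api/2/issue/{key}/worklog, logging `hours` yesterday.
    Assumption: 'yesterday' means 09:00 on the previous calendar day in now's timezone."""
    started = (now - timedelta(days=1)).replace(hour=9, minute=0, second=0, microsecond=0)
    return json.dumps({
        "timeSpentSeconds": int(hours * 3600),
        # Jira expects a timestamp like 2024-05-01T09:00:00.000+0000
        "started": started.strftime("%Y-%m-%dT%H:%M:%S.000%z"),
    })


if __name__ == "__main__":
    print(search_path(critical_open_bugs_jql()))
    print(worklog_payload(2, datetime.now(timezone.utc)))
```

Keeping this mapping in explicit, testable functions (rather than letting the model write raw JQL or payloads) makes it easier to review exactly what each prompt is allowed to do.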